Production Meteor and Node, Using Docker, Part VII

Backups! (Boring no longer.)

By now, you've experienced the power of Docker links several times. Wasn’t that cross-cloud autoconfigured load balancing pretty rad?

Well, there’s actually yet another practical use for it: database backups.

Docker Cloud has a couple of containers to help you here. Let's take a look at those before I show you something even cooler that we've built.

Project Ricochet is a full-service digital agency specializing in Open Source & Docker.

Is there something we can help you or your team out with?

Set Up MySQL Databases for Automatic Backups

(Note: You can do the same for Mongo. The approach is similar, though I'm only covering MySQL in this post.)

Some context: On a traditional server, you would normally set up a cron job (or something similar) to dump your databases on a schedule. With Docker, you instead run a separate container linked to your MySQL container. That separate container can connect to the databases in your MySQL container and back them up.
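To make "linked" a bit more concrete, here is a minimal Python sketch of how a backup container could locate its MySQL peer. It assumes legacy Docker links with the alias `mysql`, under which Docker injects variables such as `MYSQL_PORT_3306_TCP_ADDR` into the linked container. The function name and the `backup` user are hypothetical, and the code only builds a `mysqldump` command instead of running it; it is not how the containers in this post are actually implemented.

```python
import os
from typing import List, Mapping, Optional


def build_dump_command(
    user: str,
    password: str,
    alias: str = "mysql",
    env: Optional[Mapping[str, str]] = None,
) -> List[str]:
    """Build (but do not run) a mysqldump argv aimed at the linked MySQL container."""
    env = os.environ if env is None else env
    # Legacy links upper-case the alias and turn dashes into underscores.
    prefix = alias.upper().replace("-", "_")
    # Docker exposes the peer's address and port as <ALIAS>_PORT_3306_TCP_ADDR / _PORT.
    host = env[f"{prefix}_PORT_3306_TCP_ADDR"]
    port = env.get(f"{prefix}_PORT_3306_TCP_PORT", "3306")
    return [
        "mysqldump",
        "-h", host,
        "-P", port,
        "-u", user,
        f"-p{password}",          # mysqldump expects no space after -p
        "--all-databases",
        "--single-transaction",   # consistent InnoDB snapshot without locking tables
    ]


if __name__ == "__main__":
    # Example values standing in for what Docker would inject.
    fake_env = {"MYSQL_PORT_3306_TCP_ADDR": "10.7.0.12", "MYSQL_PORT_3306_TCP_PORT": "3306"}
    print(" ".join(build_dump_command("backup", "change-me", env=fake_env)))
```

Passing the password as `-p…` keeps the sketch short, but it is visible in the process list; an option file is the safer choice in production.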

The container to use for backups is tutum/mysql-backup. Setting it up is straightforward: you can pretty much just reuse your MySQL credentials. Make sure, of course, that your MySQL container has a user that the backup container can connect to.

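For example, "root@localhost" won't work: an account restricted to localhost only accepts connections made from inside the MySQL container itself, while the backup container connects over the network from a different host. An account such as "backup@-DOCKER-CLOUD-CONTAINER-HOSTNAME-" will work.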
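If you need to create that account, the SQL looks roughly like what this small Python helper prints. The helper names, the `backup` user, and the host value are placeholders; the privilege list is the set `mysqldump` typically needs (newer MySQL releases may also want `PROCESS` unless you dump with `--no-tablespaces`). Treat it as a starting point and adjust it to your MySQL version and security requirements.

```python
from typing import List

# Privileges mysqldump typically needs: SELECT to read rows, LOCK TABLES when
# --single-transaction is not used, SHOW VIEW for views, TRIGGER for triggers,
# and EVENT for scheduled events.
BACKUP_PRIVILEGES = ("SELECT", "LOCK TABLES", "SHOW VIEW", "TRIGGER", "EVENT")


def quote(value: str) -> str:
    """Return a MySQL string literal, escaping backslashes and single quotes."""
    return "'" + value.replace("\\", "\\\\").replace("'", "''") + "'"


def backup_user_sql(user: str, host: str, password: str) -> List[str]:
    """SQL that creates user@host and grants it the privileges mysqldump needs."""
    account = f"{quote(user)}@{quote(host)}"
    return [
        f"CREATE USER {account} IDENTIFIED BY {quote(password)};",
        f"GRANT {', '.join(BACKUP_PRIVILEGES)} ON *.* TO {account};",
    ]


if __name__ == "__main__":
    # Placeholder host: use the hostname your backup container connects from.
    for statement in backup_user_sql("backup", "backup-container-hostname", "change-me"):
        print(statement)
```

The host part is what makes the difference here: it has to match where the backup container's connection comes from, not the MySQL container itself.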

Useful configuration options:

CRON_TIME: Specify a schedule, cron-style, for how often to take backups.

MAX_BACKUPS: Specify how many backups to keep. Once the limit is reached, the oldest backups are discarded, which saves disk space.
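To illustrate what MAX_BACKUPS means in practice, here is a minimal Python sketch of that retention rule. It is not the container's own script: it assumes one dump file per run, named so that alphabetical order matches chronological order (for example `2016.01.31.000000.sql`), and the function name is hypothetical.

```python
from pathlib import Path
from typing import List, Optional


def prune_backups(backup_dir: Path, max_backups: Optional[int], pattern: str = "*.sql") -> List[Path]:
    """Keep the newest `max_backups` dumps in `backup_dir`; delete and return the rest.

    None keeps everything, mirroring "no limit".
    """
    if max_backups is None:
        return []
    if max_backups < 1:
        raise ValueError("max_backups must be at least 1")
    # Newest first, relying on timestamped file names that sort chronologically.
    dumps = sorted(backup_dir.glob(pattern), reverse=True)
    stale = dumps[max_backups:]
    for path in stale:
        path.unlink()
    return stale
```

Running this after every dump keeps the directory at a fixed size, which is exactly the space-saving trade-off MAX_BACKUPS gives you: older restore points are gone once they fall off the end.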

How to Preserve Your Backups:

Unfortunately, Docker has no good way to preserve your backups. Just never delete your backup container!

Ha! Just kidding :) True to form, this is also straightforward: Use Dockup and sync your backup volume to Amazon S3.

This one requires us to use volumes from another Docker container, so let's look at how to do that.

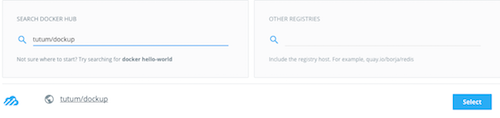

- Create the service as usual:

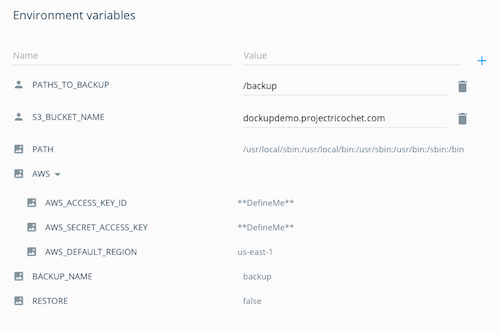

- Configure it as needed. Be sure to override the environment variables needed for S3.

In particular, set PATHS_TO_BACKUP to /backup (the path tutum/mysql-backup uses for its volume).

- This step is critical: Add Volumes From your mysql-backup instance, so that Dockup can see the backup files mysql-backup writes to /backup.

- Create the service and it will do its thing.
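Under the hood, a Dockup-style sync boils down to "archive the configured paths, then upload the archive." The Python sketch below covers only the archive step, assuming PATHS_TO_BACKUP holds one or more space-separated paths. `archive_paths` and its `backup_name` prefix are hypothetical, and the S3 upload is left out (in practice a tool such as the AWS CLI would copy the file to your bucket). It fails loudly when a path is missing, which is the symptom you would expect if Volumes From was not configured.

```python
import tarfile
from datetime import datetime, timezone
from pathlib import Path


def archive_paths(paths_to_backup: str, out_dir: Path, backup_name: str = "backup") -> Path:
    """Bundle every path in the space-separated `paths_to_backup` into one .tar.gz."""
    stamp = datetime.now(timezone.utc).strftime("%Y-%m-%d-%H-%M-%S")
    archive = out_dir / f"{backup_name}-{stamp}.tar.gz"
    sources = [Path(raw) for raw in paths_to_backup.split()]
    # Check everything up front so a missing volume doesn't leave a partial archive.
    for src in sources:
        if not src.exists():
            # In a Dockup-style setup this usually means Volumes From is missing.
            raise FileNotFoundError(f"{src} not found; is the backup volume mounted?")
    with tarfile.open(str(archive), "w:gz") as tar:
        for src in sources:
            tar.add(str(src), arcname=src.name)  # directories are added recursively
    return archive
```

With the configuration above you would call something like `archive_paths("/backup", Path("/tmp"))`. Write the archive outside the directories being archived; otherwise the tarball would try to include itself.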

Or just do it all at once!

Here’s the fun part. We've created a public Docker image that combines mysql-backup and Dockup into one, so you can run a single container instead of two. It’s located here.

The combined container accepts the environment variables of both containers above. We also have a version for Mongo.

Both of our combo containers will back up and sync according to the CRON_TIME you've set up, just like the mysql-backup and mongo-backup containers they're based on.
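If cron syntax is new to you, this minimal Python sketch shows how a five-field CRON_TIME value (minute, hour, day of month, month, day of week) is matched against a point in time. It is deliberately simplified: it handles `*`, `*/n`, plain numbers, and comma lists, but not ranges or names like MON, and it is not the scheduler these containers actually use.

```python
from datetime import datetime


def _field_matches(field: str, value: int, low: int) -> bool:
    """True if `value` satisfies one cron field; `low` is that field's minimum."""
    for part in field.split(","):
        if part == "*":
            return True
        if part.startswith("*/"):
            # Steps count from the field's first value: */2 in day-of-month hits 1, 3, 5, ...
            if (value - low) % int(part[2:]) == 0:
                return True
        elif int(part) == value:
            return True
    return False


def cron_matches(expr: str, when: datetime) -> bool:
    """True if the five-field cron expression `expr` fires at minute `when`."""
    minute, hour, dom, month, dow = expr.split()
    # cron numbers weekdays 0-6 starting Sunday; datetime.weekday() starts Monday at 0.
    cron_dow = (when.weekday() + 1) % 7
    dom_ok = _field_matches(dom, when.day, 1)
    dow_ok = _field_matches(dow, cron_dow, 0)
    # Classic cron rule: if both day fields are restricted, either one may match.
    if not dom.startswith("*") and not dow.startswith("*"):
        day_ok = dom_ok or dow_ok
    else:
        day_ok = dom_ok and dow_ok
    return (
        _field_matches(minute, when.minute, 0)
        and _field_matches(hour, when.hour, 0)
        and _field_matches(month, when.month, 1)
        and day_ok
    )
```

For example, `cron_matches("0 3 * * *", datetime(2016, 1, 31, 3, 0))` is True: that expression means "every day at 03:00", a common choice for a nightly backup.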

Well, I certainly hope you’ve enjoyed this series as much as I've enjoyed bringing it to you. Truth be told, I was having so much fun, it was hard to contain myself! (Haha.)

So, why don’t we keep the conversation going? I would love to hear how you fared. Were any of the steps more difficult than anticipated? Could I have spelled out something more clearly or provided more context? Any interesting new discoveries?

If we identify anything that can be improved, we’ll update the post and/or series for you. Until then, happy dockering!

Click here for the previous installment in the series.
